AI - Tool or Threat?

As opaque artificial intelligence (AI) systems start to perform tasks at human-level proficiency, should we be excited or scared?

Artificial Intelligence (AI) is clearly having its moment in the media limelight. Across news outlets and social media, there is a steady stream of how-to posts, articles, and op-eds related to AI.

It's no surprise that my previous article was also about artificial intelligence. In it, I examined the generative AI frenzy and highlighted how this new technology will disrupt digital media and productivity.

It seems like everyone has something to say about AI, and opinions are polarized, even among experts. Some hail AI as the next big productivity revolution [Bill Gates], while others call it one of the greatest threats to humanity and ask for a pause in its development [Future of Life Institute].

To appreciate more deeply why there are such contrasting opinions on the benefits and risks of AI, it is important to understand that something fundamental has changed in the field, namely how large AI models are now being built and trained.

A new class of AI models called "transformers", first described in a 2017 paper from Google, has emerged in the past few years. These models stand out for their ability to contextualize words, sentences, images, and other inputs to generate nuanced and accurate responses.
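To make "contextualize" a little more concrete, here is a minimal Python sketch of the attention idea at the heart of transformers: each word's vector is rebuilt as a weighted blend of every vector in the input, with the weights based on similarity. The two-dimensional embeddings and the function names are made up for illustration, and the sketch leaves out the learned projections, multiple attention heads, and stacked layers that real transformers use.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(vectors):
    """Return one context-aware vector per input vector.

    Simplification (an assumption of this sketch): queries, keys, and
    values are the raw input vectors. A real transformer learns a
    separate projection for each of them.
    """
    dim = len(vectors[0])
    outputs = []
    for query in vectors:
        # Similarity of this word to every word in the input (scaled dot product).
        scores = [
            sum(q * k for q, k in zip(query, key)) / math.sqrt(dim)
            for key in vectors
        ]
        weights = softmax(scores)
        # Blend all vectors, giving more weight to the more similar ones.
        mixed = [
            sum(w * v[i] for w, v in zip(weights, vectors))
            for i in range(dim)
        ]
        outputs.append(mixed)
    return outputs

# Hypothetical 2-dimensional "embeddings" for a three-word input.
words = ["river", "bank", "money"]
embeddings = [[1.0, 0.0], [0.5, 0.5], [0.0, 1.0]]
contextual = self_attention(embeddings)

for word, vector in zip(words, contextual):
    print(word, [round(x, 3) for x in vector])
```

In this toy input, "bank" is equally similar to all three words, so its new vector is an even blend of them, while "river" stays closest to its own meaning but absorbs some of the surrounding context.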

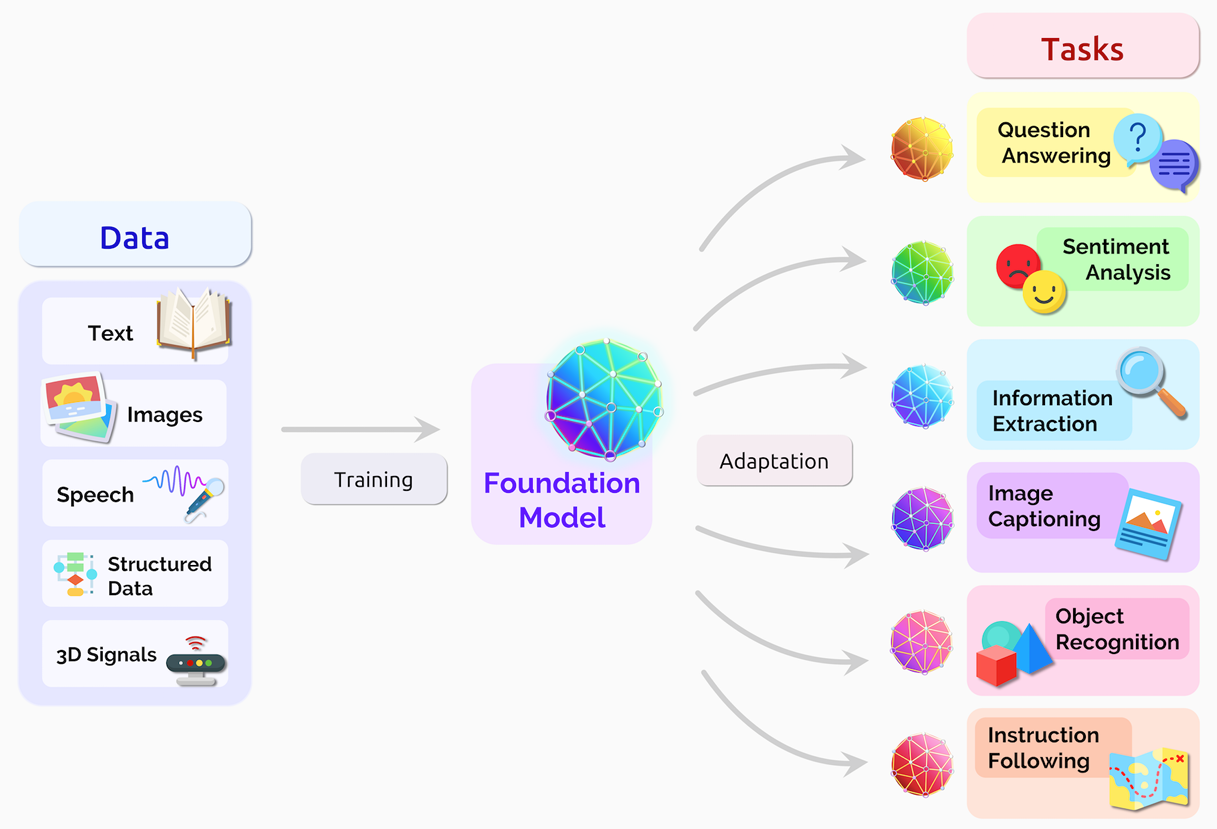

In a 2021 paper, Stanford researchers coined the term “Foundation Model” for any AI system that can use large volumes of curated online information (books, social media, digital images, websites, blogs, forums, etc.) to train the underlying mathematical model that powers its abilities. These models are known as neural networks because their design is loosely inspired by the way neurons connect in our brain.

After being trained on trillions of data points, AI models can then be used to attain human-level performance on tasks ranging from generating intelligent chat responses (ChatGPT) to creating original images from a text prompt (Midjourney).

Over the last few years, the sheer scale and scope of AI models have stretched our imagination of what is possible.

However, with billions of parameters contributing to the performance and output of these new AI systems, even their developers cannot fully explain how the system works internally. Yet somehow, these new AI models are able to learn from their training data and produce impressive results.

Next-generation AI models largely operate as intelligent 'opaque boxes' that can be fine-tuned and then deployed to perform a variety of tasks at human-level proficiency.

Owing to this 'opaque box' approach, even an AI model trained on highly curated input data can produce unintended or undesirable outputs.

AI researchers are now using reinforcement learning mechanisms to further tune these 'opaque box' models, making them safer and teaching them human preferences so that the AI system can distinguish between preferred and undesirable results.
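As a rough illustration of what "learning human preferences" can mean, the Python sketch below fits a score for each of three candidate responses from pairwise human judgments, using a Bradley-Terry style comparison model. This is a simplified stand-in chosen for illustration, not the pipeline any particular lab uses: in practice the scores come from a neural network, and a separate reinforcement learning step then tunes the AI model toward high-scoring outputs. The response labels, function names, and training settings are all hypothetical.

```python
import math

def preference_probability(score_a, score_b):
    """Bradley-Terry style probability that response A is preferred over B."""
    return 1.0 / (1.0 + math.exp(score_b - score_a))

def train_reward_scores(comparisons, num_responses, learning_rate=0.5, epochs=200):
    """Fit one scalar score per response from (preferred, rejected) index pairs."""
    scores = [0.0] * num_responses
    for _ in range(epochs):
        for preferred, rejected in comparisons:
            p = preference_probability(scores[preferred], scores[rejected])
            # Gradient ascent on the log-likelihood of the observed human choice:
            # the less expected the choice was, the bigger the correction.
            step = learning_rate * (1.0 - p)
            scores[preferred] += step
            scores[rejected] -= step
    return scores

# Hypothetical labels: 0 = helpful answer, 1 = evasive answer, 2 = harmful answer.
# Each pair means "a human reviewer preferred the first response over the second".
human_comparisons = [(0, 1), (0, 2), (1, 2)]
reward = train_reward_scores(human_comparisons, num_responses=3)

for label, score in zip(["helpful", "evasive", "harmful"], reward):
    print(label, round(score, 2))
```

After training, the helpful answer carries the highest score and the harmful answer the lowest, which is the kind of signal a later tuning step could use to steer a model toward preferred results.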

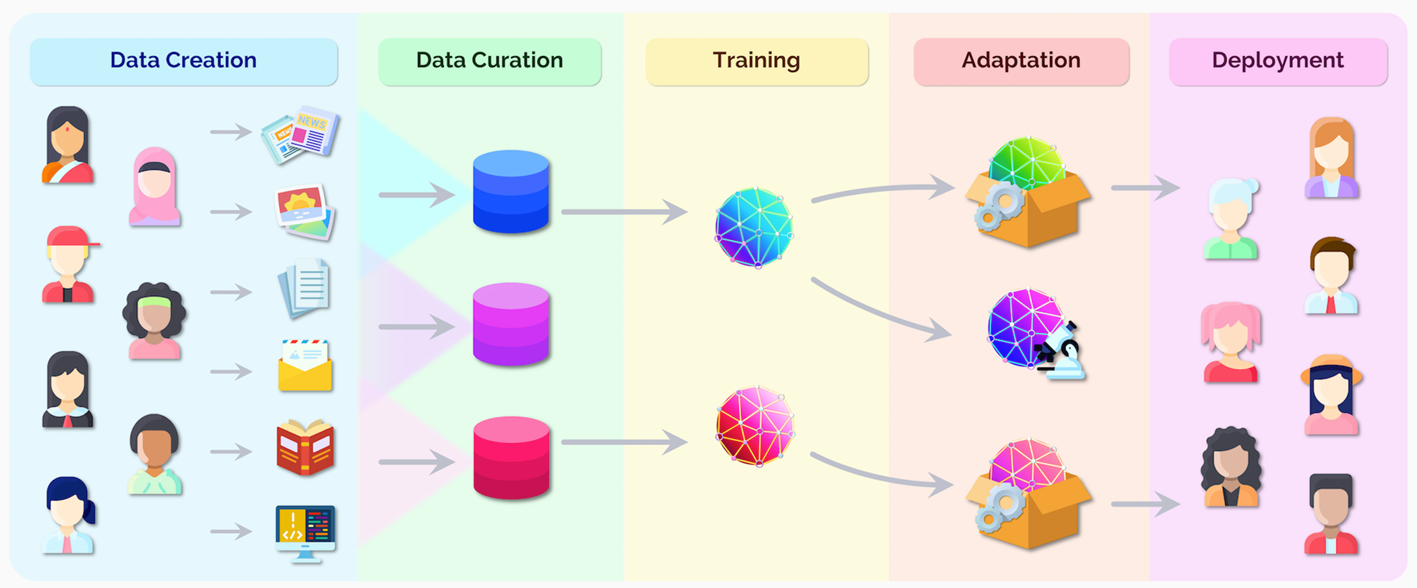

Ultimately, humans are critical bookends in the development pipeline for any AI system. Not only are people the upstream source of data that is used to train the AI model, but also downstream, people are the recipients of both the benefits and harms of the deployed AI system.

Because the new wave of AI models demonstrates fluency in human language and conversation, it has also prompted some alarmist media coverage, ranging from reports of disturbing chats with AI bots to claims that an AI has come to life.

However, fluency in conversation does not mean these AI systems possess a fluency of understanding as well.

As humans, we understand and express information using both linguistic and nonlinguistic means, including sight, sound, smell, and social cues. Written language is simply a compressed and sometimes incomplete representation of the various things that we see, feel, and know as a part of the human experience.

That's why large AI systems trained exclusively on words and sentences from a large corpus of online text can never reach the deep thinking, awareness, and expression that we see in humans.

Attempts to make AI systems larger, by adding more parameters and training them on bigger datasets, are also bound to hit diminishing returns after a point.

So we really don't need to worry that artificial intelligence will one day develop self-awareness and turn into some evil sentient villain, as is often shown in Hollywood movies.

What we SHOULD BE WORRIED ABOUT is how these powerful and opaque AI models can be deployed in ill-conceived or malicious ways. 👇

AI systems trained on internet data will unfortunately inherit the biases that are embedded in online digital information. The internet is a rich source of data, but it is by no means exhaustive or inclusive. No matter how much we curate, any AI system trained on internet data absorbs its underlying biases, essentially amplifying the voices and opinions of communities that are louder and more expressive online and enjoy the privilege of digital access.

AI systems with the ability to create digital media and converse like humans can also easily be manipulated and misused for spreading misinformation and running social engineering attacks, such as phishing emails or phone calls. These AI-powered attacks could be designed to trick vulnerable individuals into revealing sensitive information, such as login credentials or financial details, or to convince them to download malware.

AI systems working as digital butlers are great for offloading some of the cognitive load in our hectic lives, organizing our information feeds, and assisting us as personal productivity agents. But the same AI tools can also be hijacked and turned into a predatory weapon that easily takes advantage of our propensity to trust technology, a tendency that will be even more amplified with a technology like AI that mimics human traits.

As AI systems become more reliable and capable, we will delegate more and more of our decision-making to AI technology. This can be a slippery slope. It is one thing to use AI tools to assist with writing your emails, but quite another to start deploying them in complex domains that require nuanced human judgment. We have already seen terrible and biased outcomes when AI algorithms were deployed prematurely in the public domain for decision-making, without adequate human supervision.

The most concerning adoption and deployment of AI systems by far will be in the field of law enforcement. Things may start small and seemingly harmless, with the slow introduction of AI algorithms for profiling and predictive policing, while "allegedly friendly" police robot dogs start roaming our streets. Over time, as these technologies converge, things could get quite dystopian for our society if left unchecked, with decisions and law enforcement actions being made in real time by AI systems and autonomous robots deployed in the field.

CONCLUSION

It is no surprise that opinions are highly polarized on artificial intelligence.

On the one hand, we are filled with a sense of wonder at the amazing capabilities of AI technology, but on the other hand, we are also filled with a sense of dread about what will happen if we give up control to intelligent but opaque machines.

The choice may seem trivial right now, as we use ChatGPT for fun conversations or create a cool new picture by giving prompts to Midjourney. However, once AI technology spreads into other aspects of our lives that call for nuanced understanding and human judgment, we will need to be much more deliberate and conscious about how AI systems are informing our decisions.

The future of AI should be determined not just by technologists building better and faster models, but also by considerations from the fields of ethics, philosophy, regulation, policy, law, sociology, and more.

I have previously called for a change in how tech innovation is funded. This becomes even more urgent for the responsible development of a technology like AI, which needs more consideration than merely a short-sighted focus on commercial profitability and venture capital returns.